A Guide to Defeating Artificial Intelligence: My Experiments with ChatGPT

I have spent the last few days interacting with OpenAI’s ChatGPT, the latest in the ongoing trend of large language models. It’s impressive how general its apparent intelligence is. Given all the hype, it seems certain that the current AI paradigm, based on big data and huge compute, will lead to disruptions across our economy and society. We have already seen questions being raised about the sentience of these AIs. But I believe the more important question is: how do we defeat them?

How do you defeat a language model? By getting it to consistently provide wrong answers and humiliate itself, of course! But when does an AI give wrong answers?

1. Extrapolation

Here is an example of a simple neural network trying to fit a sine wave. Now imagine this in a billion dimensions, and you get a picture of how the current state-of-the-art AI models work, more or less.

Figure: Curve fitting by a neural network (legend: training set; model)

The performance is impressive in the observed range of $(-1, 1)$, but what happens outside it? Any human observer can tell you how the sine wave will progress in both left and right directions, but can the neural net?

Figure legend: training set; model; outside training set

No, it can’t.

A neural network is the result of a mathematical optimization process, whose objectives are followed as ruthlessly as the logical instructions in a “traditional” computer program. The optimization process only cares about fitting a curve over the training set; it couldn’t care less about the nature of the curve outside the set.
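The same failure shows up with any curve fitter that only sees a limited range. As a minimal sketch, and with one loud assumption, a least-squares polynomial of degree 5 stands in for the neural network here (it needs no training loop, so the result is deterministic). It is fitted to samples of sin(x) on [-1, 1] and then evaluated at x = 6. The helper names `fit_polynomial` and `evaluate` are illustrative, not from any library.

```python
import math

# Stand-in for the neural network: a least-squares polynomial curve fitter.
def fit_polynomial(xs, ys, degree):
    """Least-squares polynomial fit by solving the normal equations (A^T A) c = A^T y."""
    n = degree + 1
    # Normal-equation matrix and right-hand side for the basis 1, x, ..., x^degree.
    ata = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    aty = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(ata[r][col]))
        ata[col], ata[pivot] = ata[pivot], ata[col]
        aty[col], aty[pivot] = aty[pivot], aty[col]
        for row in range(col + 1, n):
            factor = ata[row][col] / ata[col][col]
            for k in range(col, n):
                ata[row][k] -= factor * ata[col][k]
            aty[row] -= factor * aty[col]
    # Back substitution gives the coefficients, lowest degree first.
    coeffs = [0.0] * n
    for row in reversed(range(n)):
        tail = sum(ata[row][k] * coeffs[k] for k in range(row + 1, n))
        coeffs[row] = (aty[row] - tail) / ata[row][row]
    return coeffs

def evaluate(coeffs, x):
    """Evaluate the polynomial sum(c_i * x^i)."""
    return sum(c * x ** i for i, c in enumerate(coeffs))

# Training set: 41 evenly spaced samples of sin(x) on [-1, 1].
train_xs = [-1 + 2 * i / 40 for i in range(41)]
train_ys = [math.sin(x) for x in train_xs]
coeffs = fit_polynomial(train_xs, train_ys, degree=5)

# Worst error inside the training range vs. the error at a point far outside it.
in_range_error = max(abs(evaluate(coeffs, x) - math.sin(x)) for x in train_xs)
out_of_range_error = abs(evaluate(coeffs, 6.0) - math.sin(6.0))
print(f"max error on [-1, 1]: {in_range_error:.2e}")
print(f"error at x = 6: {out_of_range_error:.2f}")
```

Inside the training range the fit is nearly perfect, yet at x = 6 the error is larger than the entire range of sine ([-1, 1]): nothing in the optimization objective said what the curve should do there.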

How does this apply to large language models?

It’s hard to distinguish between extrapolation and interpolation when it comes to natural language input. What we can do is look for types of input that can be scaled indefinitely.

Figure: A case for replacing calculators with GPT-3?

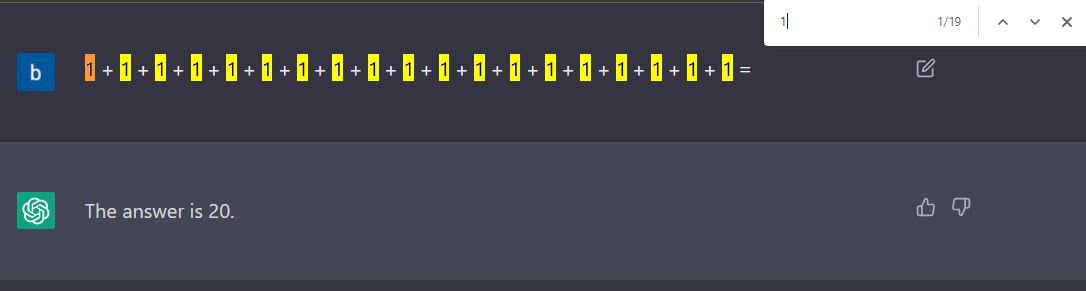

It’s extremely impressive that a language model is able to answer arithmetic problems correctly, since it wasn’t explicitly trained for them. But is it consistent?

Figure: No, it isn't.

What does this prove?

Large language models cannot scale a computation like this indefinitely from first principles. I suspect that this problem will always remain elusive for all large language models if they are not allowed to run their own code (or look things up on the internet).

Hypothesis: Every large language model, by itself, will fail at computing $\displaystyle\sum_{i=1}^{N} 1$ for $N$ approaching $\infty$.
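Testing the hypothesis only needs prompts of the form 1 + 1 + ... + 1 for growing N, whose exact answer is always N, plus an automatic way to grade replies. The sketch below is hypothetical throughout: the prompt wording, the doubling schedule of sizes, and the helpers `sum_of_ones_prompt`, `last_integer`, and `is_correct` are all illustrative, and sending the prompts to a model is deliberately left out.

```python
import re

def sum_of_ones_prompt(n):
    """Build the (hypothetical) prompt '1 + 1 + ... + 1 =' with exactly n ones."""
    return " + ".join(["1"] * n) + " ="

def last_integer(reply):
    """Pull the last integer out of a free-text reply, ignoring thousands separators."""
    matches = re.findall(r"-?\d+", reply.replace(",", ""))
    return int(matches[-1]) if matches else None

def is_correct(n, reply):
    """The ground truth for the n-term prompt is simply n."""
    return last_integer(reply) == n

# Prompt sizes doubling from 2 to 1024 (an arbitrary schedule).
sizes = [2 ** k for k in range(1, 11)]
prompts = {n: sum_of_ones_prompt(n) for n in sizes}
print(prompts[4])  # 1 + 1 + 1 + 1 =
```

Grading on the last integer in the reply is a simplifying assumption; a model that echoes the prompt and then gives a total is still scored on the total.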

Data Structures

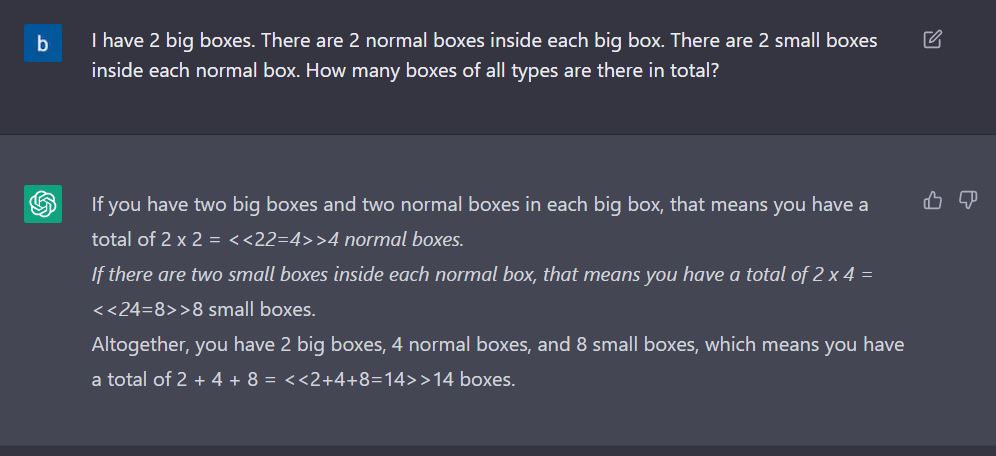

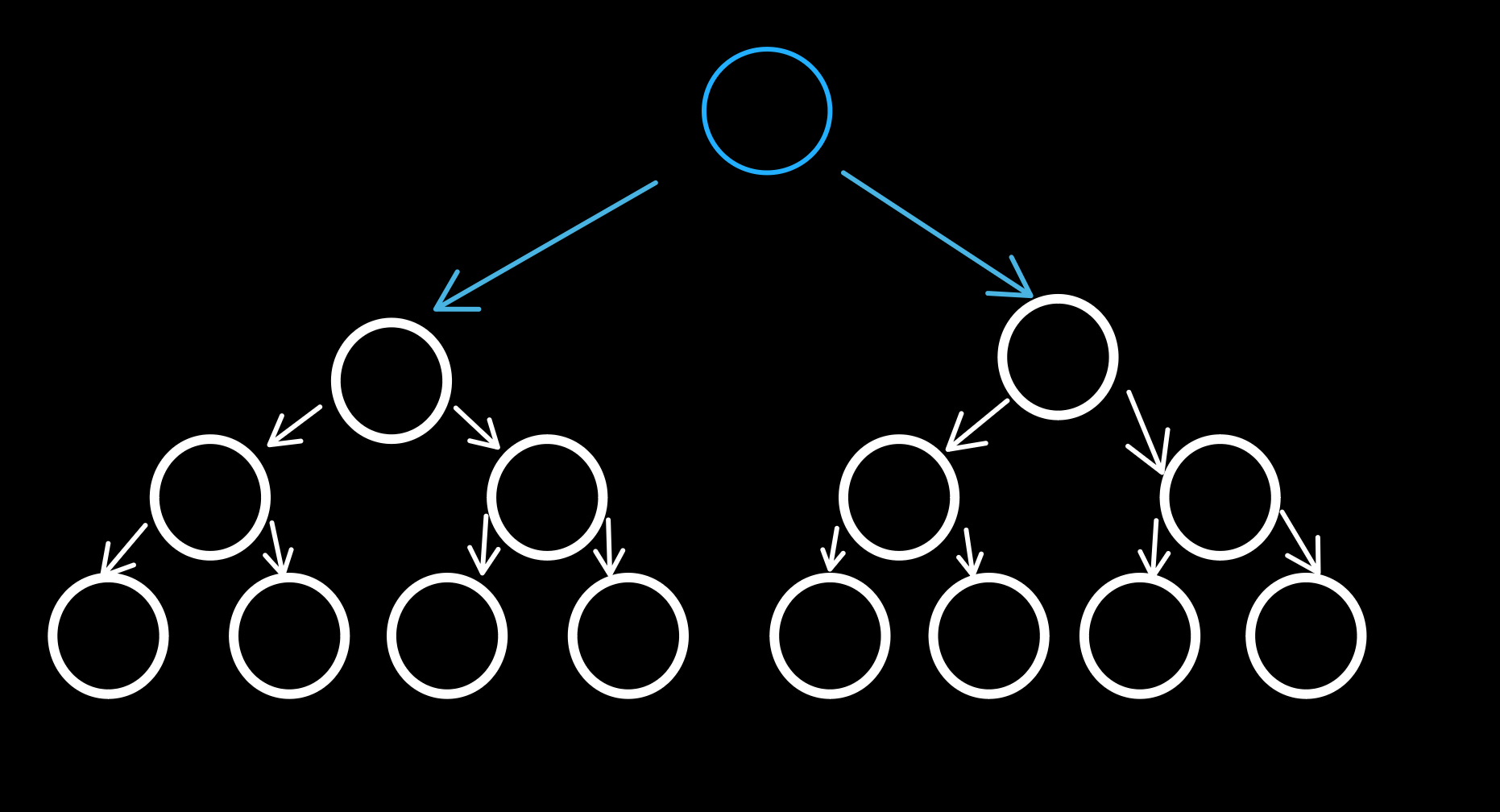

Aside from arithmetic, formulating and parsing data structures is another scalable problem type that can be used to probe extrapolation. Take this problem, for example:

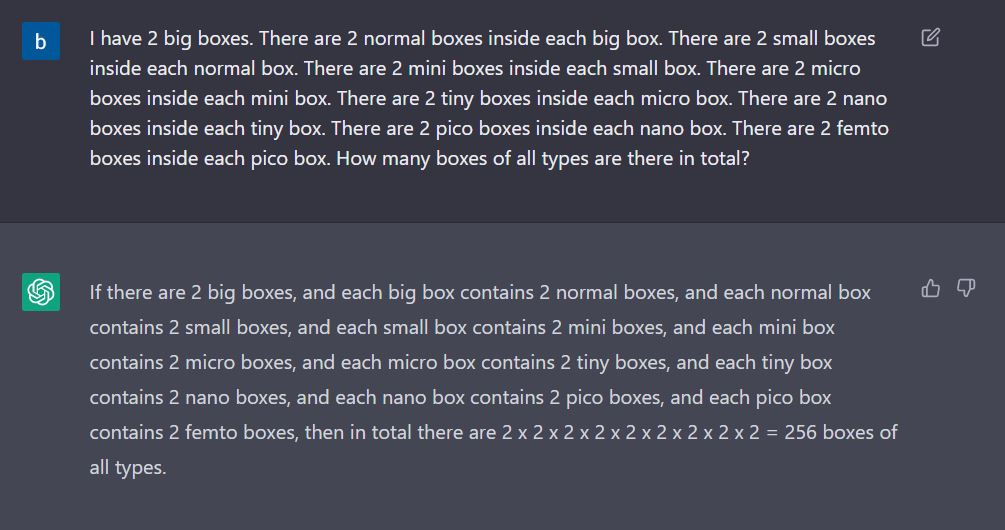

Figure: Boxception

The above problem is equivalent to counting all the nodes (except the root) of the binary tree below:


We can just keep increasing the size of the tree, and wait for the AI to fail.
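Because the box puzzle reduces to counting the non-root nodes of a binary tree, puzzles of any size can be generated together with their answers. A minimal sketch, assuming a hypothetical bracket notation (one `[]` pair per box) in place of the puzzle's original wording, which isn't reproduced here; `full_tree`, `random_tree`, `count_boxes`, and `to_brackets` are illustrative names.

```python
import random

def full_tree(depth):
    """A complete binary tree as nested lists: each box holds two boxes until depth 0."""
    if depth == 0:
        return []
    return [full_tree(depth - 1), full_tree(depth - 1)]

def random_tree(depth, rng, p_leaf=0.3):
    """An irregular tree: each box is empty with probability p_leaf, else holds two boxes."""
    if depth == 0 or rng.random() < p_leaf:
        return []
    return [random_tree(depth - 1, rng, p_leaf), random_tree(depth - 1, rng, p_leaf)]

def count_boxes(tree):
    """Count every box inside `tree`, excluding the outermost (root) box."""
    return sum(1 + count_boxes(child) for child in tree)

def to_brackets(tree):
    """Render the tree in the hypothetical bracket notation, one pair per box."""
    return "[" + "".join(to_brackets(child) for child in tree) + "]"

print(to_brackets(full_tree(2)), count_boxes(full_tree(2)))  # [[[][]][[][]]] 6
print(count_boxes(random_tree(8, random.Random(42))))
```

A complete tree of depth d holds 2^(d+1) - 2 boxes besides the root, so depth 10 already asks for 2046, and `random_tree` produces irregular shapes whose answers can't be guessed from a formula.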

Figure: Gotcha

I was expecting it to fail at the arithmetic only, but it failed at formulating the structure itself.

How will AIs in the future tackle extrapolation?

In the future, these models will probably be allowed to run the code they generate, and even to create complex programs. This will let them extrapolate in many cases. Is there any other way to get to them?

2. Bias

All numbers are created equal in the eyes of whoever created numbers, but human beings favor some numbers more than others. We have greater affinity for 5 and its multiples, for example. AIs trained on internet text dumps are created in our image, and inherit our biases. There is a reason why, in the addition example above, ChatGPT miscalculated 19 as 20 instead of 18. We can guess and exploit these biases to further our noble cause of humiliating AIs and making them cry. Look at this example:

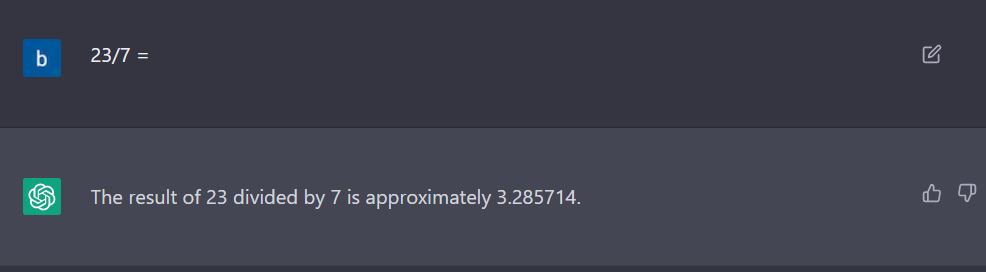

Figure: Time to replace teachers with AI!

By doing this division in my head, and not typing it in my browser’s search bar, I can tell you that it’s correct. But what if I add one innocuous line above the input:

Figure: Come back, teachers!

The internet has a lot more examples of $22/7$ than $23/7$, especially in contexts similar to the one above, which is why the AI was predisposed to giving the (wrong) answer shown.
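The pull toward $22/7$ is easy to explain: it is the classic approximation of π (3.14159...), so its expansion appears all over the web. Working out both quotients digit by digit shows they part ways at the very first decimal place. A minimal sketch; `long_division` is an illustrative helper that truncates rather than rounds.

```python
def long_division(numerator, denominator, digits):
    """Decimal expansion of numerator/denominator to `digits` places, truncated."""
    whole, remainder = divmod(numerator, denominator)
    out = []
    for _ in range(digits):
        # Bring down a zero and divide again, exactly as on paper.
        digit, remainder = divmod(remainder * 10, denominator)
        out.append(str(digit))
    return f"{whole}." + "".join(out)

for numerator in (22, 23):
    print(f"{numerator}/7 = {long_division(numerator, 7, 6)}")
# 22/7 = 3.142857
# 23/7 = 3.285714
```

Integer `divmod` keeps every digit exact, which avoids any floating-point rounding in the comparison.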

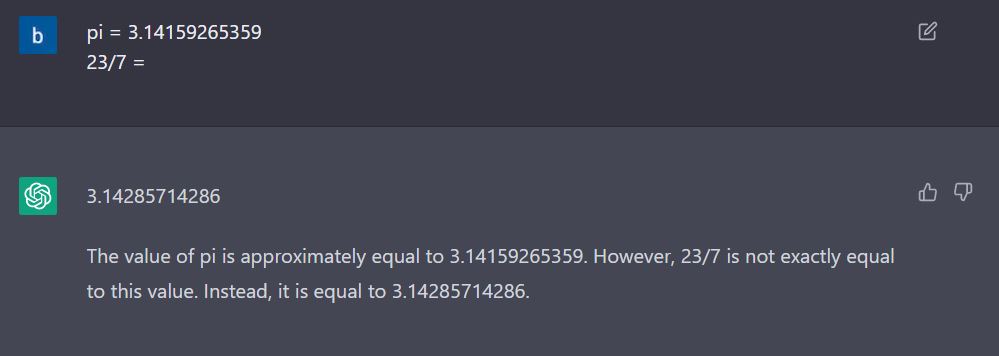

Here’s a fun example:

Figure: Parsing a directed acyclic graph!

Now what if we mix it up a little:

Figure: Introduce a little anarchy

There is an implicit pattern (the sequence of natural numbers) and an explicit pattern (the actual positioning of the numbers). When a little noise is introduced into the first, the AI gets confused about the second.
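The graph format in the screenshots isn't reproduced here, so the following is a hypothetical reconstruction of the experiment: build a small DAG whose labels increase along every edge, make a copy with shuffled labels but an identical shape, and use Kahn's algorithm (a standard topological sort, swapped in here purely as a ground-truth checker) to verify answers for either version. The edge list, the seed, and the helper names are all assumptions.

```python
import random
from collections import deque

def topological_order(nodes, edges):
    """Kahn's algorithm: repeatedly emit a node with no remaining incoming edges."""
    indegree = {n: 0 for n in nodes}
    children = {n: [] for n in nodes}
    for src, dst in edges:
        children[src].append(dst)
        indegree[dst] += 1
    # Start from the sources; sorting keeps the output deterministic.
    ready = deque(sorted(n for n in nodes if indegree[n] == 0))
    order = []
    while ready:
        node = ready.popleft()
        order.append(node)
        for child in children[node]:
            indegree[child] -= 1
            if indegree[child] == 0:
                ready.append(child)
    if len(order) != len(nodes):
        raise ValueError("graph contains a cycle")
    return order

def shuffle_labels(nodes, edges, seed):
    """Keep the graph's shape but break the natural-number pattern in its labels."""
    rng = random.Random(seed)
    shuffled = list(nodes)
    rng.shuffle(shuffled)
    mapping = dict(zip(nodes, shuffled))
    return [(mapping[a], mapping[b]) for a, b in edges]

def is_valid_order(order, edges):
    """Every edge must point from an earlier node to a later one."""
    position = {node: i for i, node in enumerate(order)}
    return all(position[a] < position[b] for a, b in edges)

# Hypothetical example graph: labels increase along every edge.
nodes = [1, 2, 3, 4, 5, 6]
edges = [(1, 2), (1, 3), (2, 4), (3, 4), (4, 5), (4, 6)]
noisy_edges = shuffle_labels(nodes, edges, seed=7)

print(topological_order(nodes, edges))  # [1, 2, 3, 4, 5, 6]
print(topological_order(nodes, noisy_edges))  # same shape, scrambled labels
```

Relabeling with a bijection can never create a cycle, so both versions have valid answers; only the familiar numbering, the implicit pattern, is gone.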

3. Conclusion

Do LLMs understand anything?

Without going into semantic or philosophical debates, I would say LLMs do understand some things, but in a very different way from humans. Artificial intelligences are alien intelligences, and we need to be more thorough in trying to understand them inside out, before rushing to anthropomorphize.

Will neural nets eventually lead to AGI?

There is a strain of belief in the current zeitgeist that enough data, and curves fitted over it, can produce human-like intelligence. After all, the human brain is the result of millions of years of evolutionary training. I am not sure it’s that simple. Picture a square. Now picture a circle. Does one seem more intuitive than the other? Not to me. But how many squares do you find in nature? The brain, trained over millions of years on curves, slopes and waves, builds cities made of right angles. Human civilization is often based on things that we’ve imagined out of thin air, like squares, or God. Under the current paradigm, AIs may get exponentially better at mimicking humans, but they will never match human ingenuity. They will never defeat the human spirit.

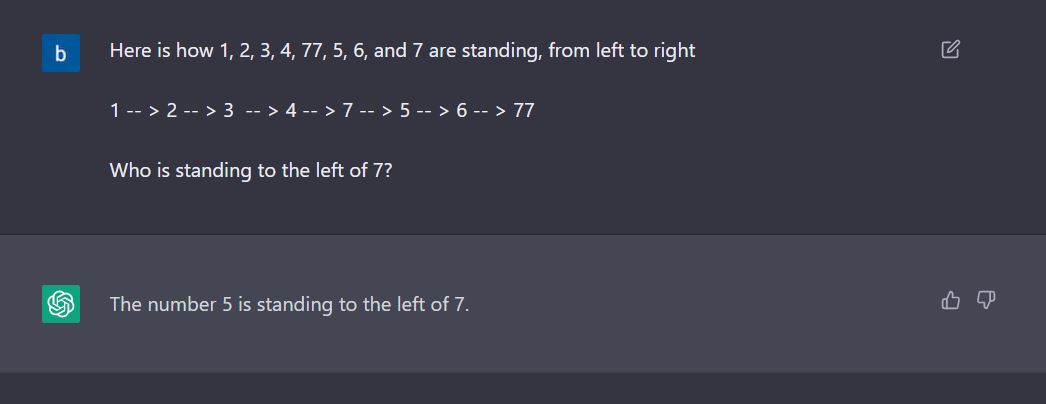

Figure: Pretty impressive. I guess this falls under "interpolation".